Posted by Simon Long Dec 3, 2020

Getting Started With Oracle Cloud VMware Solution (OCVS) – Networking Configuration

In my previous post in the ‘Getting Started With Oracle Cloud VMware Solution (OCVS)’ series, Getting Started With Oracle Cloud VMware Solution (OCVS) – Deploying The SDDC With HCX, we deployed a Software-Defined Data Center (SDDC) along with VMware HCX into Oracle Cloud.

Posts in this series:

- Deploying A Bastion Host

- Deploying The SDDC With HCX

- Deployment Overview

- Networking Configuration

- Connecting To Oracle Cloud Infrastructure Services

- Connecting To An On-Premises Environment

- Migrating Workloads Using VMware HCX

In this post, I’m going to review the overall networking configuration, including NSX-T.

ESXi Host ‘Oracle Cloud’ Connectivity

First, let’s take a look at how the ESXi Hosts are connected to the Oracle Cloud infrastructure.

- Log in to the OCVS console

- Select the correct Region. (This should be the same region in which the SDDC and the Bastion host were deployed.)

- Click on the burger icon at the top left of the screen to display the menu

- Scroll down on the left-hand side menu and select VMware Solution

- Select the name of your newly deployed SDDC

- Scroll down to the ESXi Hosts section

- Select one of the ESXi Hosts (Compute Instance column)

- Scroll down to the Metrics section

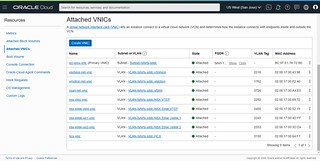

- Select Attached VNICs on the Resources menu (left-hand side of the page)

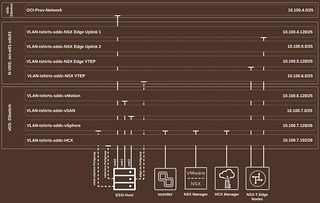

Here we can see the virtual network interfaces (VNICs), Subnets, and VLANs that are attached to the ESXi Host. The following diagram illustrates a single ESXi Host’s connectivity to the various VLANs deployed as part of the SDDC configuration. As we go through the networking configuration, the diagram will begin to make more sense.

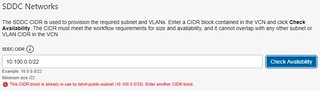

Subnets

A VCN (Virtual Cloud Network) Subnet consists of a contiguous range of IPv4 addresses that must not overlap with any other subnet in the VCN. So if you were to deploy a second SDDC into the same VCN, you would have to specify a different CIDR block. If you try to use the same CIDR block as another SDDC, you’ll receive an error.
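The non-overlap rule can be illustrated with Python’s standard `ipaddress` module. This is a minimal sketch for checking a candidate CIDR block before you deploy, not how OCI itself validates subnets; the CIDR blocks below are hypothetical examples.

```python
import ipaddress

def find_overlaps(proposed_cidr, existing_cidrs):
    """Return the existing CIDR blocks that overlap the proposed one."""
    proposed = ipaddress.ip_network(proposed_cidr)
    return [c for c in existing_cidrs if proposed.overlaps(ipaddress.ip_network(c))]

# Hypothetical CIDR blocks already in use in the VCN
existing = ["10.0.0.0/24", "10.0.1.0/24", "10.0.4.0/22"]

print(find_overlaps("10.0.1.128/25", existing))  # ['10.0.1.0/24']
print(find_overlaps("10.0.8.0/24", existing))    # []
```

Any non-empty result means the proposed block would clash with an existing subnet, which is the situation that produces the error described above.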

There is a single Subnet deployed as part of the SDDC configuration.

| Subnet | Description |
|---|---|
| Subnet-tshirts-sddc | This Subnet is where the ESXi Host Management vNICs (vmk0) reside. It is also used, as the name suggests, during the provisioning of the ESXi Hosts |

VLANs

A VLAN is an object within a VCN. VLANs are used to partition the VCN into Layer 2 broadcast domains. Each VLAN has a Route Table associated with it, which determines where traffic leaving the VLAN is forwarded for a given destination. In addition to the Route Table, each VLAN has a Network Security Group (NSG) or Security Rules (firewall rules) associated with it. These Network Security Groups work like a firewall, allowing or denying traffic in and out of the VLAN.

NOTE: When manually creating a new VLAN or Subnet, all traffic is denied by default. Rules will need to be added to allow traffic to flow.
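The default-deny behaviour can be pictured as an allow-list: traffic passes only if some rule explicitly matches it. The Python sketch below is a simplified model, assuming ingress rules defined by source CIDR, protocol, and destination port range only; it ignores stateful/stateless tracking and the other rule fields OCI supports, and `IngressRule` is a hypothetical type, not an OCI SDK class.

```python
import ipaddress
from dataclasses import dataclass

@dataclass(frozen=True)
class IngressRule:
    """Simplified allow rule: source CIDR, protocol and destination port range."""
    source: str
    protocol: str  # "tcp" or "udp"
    port_min: int
    port_max: int

def is_allowed(rules, src_ip, protocol, port):
    """Allow-list evaluation: traffic passes only if some rule matches it."""
    addr = ipaddress.ip_address(src_ip)
    for rule in rules:
        if (addr in ipaddress.ip_network(rule.source)
                and rule.protocol == protocol
                and rule.port_min <= port <= rule.port_max):
            return True
    return False  # no matching rule: denied by default

# A newly created NSG has no rules, so everything is denied
print(is_allowed([], "10.0.0.10", "tcp", 443))  # False

# Hypothetical rule allowing HTTPS from a bastion subnet
rules = [IngressRule("10.0.0.0/24", "tcp", 443, 443)]
print(is_allowed(rules, "10.0.0.10", "tcp", 443))  # True
print(is_allowed(rules, "10.0.0.10", "tcp", 22))   # False
```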

Each VLAN within the VCN is automatically assigned a VLAN ID. These VLAN IDs are only local to the VCN, so you may deploy an SDDC in another Availability Domain (AD) or Region and find the same VLAN ID in use. Even though they share the same VLAN ID, it’s important to understand that they are not the same VLAN.
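One way to think about this is that a VLAN’s identity is the pair (VCN, VLAN ID), not the VLAN ID on its own. The tiny Python sketch below models that idea; the `VcnVlan` type and the VCN identifiers are hypothetical and only illustrate the comparison.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class VcnVlan:
    """A VLAN is identified by its VCN plus its VLAN ID, not by the ID alone."""
    vcn_id: str   # hypothetical VCN identifier
    vlan_tag: int

vlan_a = VcnVlan(vcn_id="vcn-region-a", vlan_tag=1001)
vlan_b = VcnVlan(vcn_id="vcn-region-b", vlan_tag=1001)

print(vlan_a.vlan_tag == vlan_b.vlan_tag)  # True: same VLAN ID
print(vlan_a == vlan_b)                    # False: different VLANs
```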

The following VLANs are connected to each ESXi Host and are used throughout the deployment.

| VLAN | Description |
|---|---|
| VLAN-tshirts-sddc-vSphere | This VLAN is commonly called the ‘Management Network’ in on-premises environments. This is where vCenter, NSX-T management, HCX management, and the NSX-T Edges live (not the ESXi Host vmk0) |
| VLAN-tshirts-sddc-vSAN | This VLAN is dedicated to vSAN traffic |
| VLAN-tshirts-sddc-vMotion | This VLAN is dedicated to vMotion traffic |
| VLAN-tshirts-sddc-NSX VTEP | This VLAN is used by the ESXi Host TEPs (Tunnel Endpoints); NSX-T overlay traffic (Geneve encapsulated) flows East-West between the ESXi Hosts over it |
| VLAN-tshirts-sddc-NSX Edge VTEP | This VLAN is used by the NSX-T Edge TEPs to send Geneve encapsulated traffic between the NSX-T Edges and the ESXi Hosts |
| VLAN-tshirts-sddc-NSX Edge Uplink 1 | This VLAN is used for North-South communication between the SDDC, the native Oracle Cloud services, and the internet |
| VLAN-tshirts-sddc-NSX Edge Uplink 2 | This VLAN is not currently used |
| VLAN-tshirts-sddc-HCX | This VLAN is used for VMware HCX traffic |

You can drill down into each VLAN by clicking on the VLAN name.

- Click VLAN-<YOUR SDDC NAME>-vSphere

Here we can see some additional information about the VLAN. By default, the vSphere VLAN has External Access configured for vCenter (vcenter-vip), the NSX-T Management Cluster Virtual IP (nsxt-manager-vip), and the HCX Manager (hcx-manager-ip1). The External Access Type configured for all of these components is Route Target Only, which assigns an IP address that can be used as a route target on the VLAN. In other words, traffic from ‘other’ VLANs and subnets can reach these IP addresses. This is how we are able to access vCenter, NSX-T, and HCX from our Bastion host network. In addition to the External Access information, we can also see which Route Table and Network Security Group (firewall) are associated with the VLAN.
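To confirm from the Bastion host that these Route Target Only addresses are actually reachable, a quick TCP connection test is usually enough. This is a minimal Python sketch, assuming the three services answer on HTTPS port 443; the IP addresses in `TARGETS` are placeholders that you would replace with the addresses listed under External Access.

```python
import socket

# Placeholder IPs: replace them with the addresses listed under External Access
TARGETS = {
    "vcenter-vip": "10.0.0.2",
    "nsxt-manager-vip": "10.0.0.3",
    "hcx-manager-ip1": "10.0.0.4",
}

def can_reach(host, port=443, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # e.g. connection refused, timed out, no route, bad name
        return False

def check_all(targets, port=443, timeout=3.0):
    """Map each target name to whether its port accepted a connection."""
    return {name: can_reach(ip, port, timeout) for name, ip in targets.items()}
```

Running `print(check_all(TARGETS))` on the Bastion host should report `True` for each component. A `False` usually points at the Route Table or Network Security Group associated with the VLAN, which are reviewed next.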

- Click Route Table For <YOUR SDDC NAME>-vSphere

The Route table for the vSphere VLAN specifies the NAT Gateway (tshirts-ngw) as the Default Gateway for all traffic on this VLAN. This allows the virtual machines on this VLAN to access the internet if allowed in the Security Rules.
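Route selection can be sketched as a longest-prefix match: of all the rules whose destination CIDR contains the packet’s destination, the most specific one wins, and 0.0.0.0/0 only applies when nothing more specific matches. The Python sketch below assumes that behaviour and ignores the VCN’s implicit local routing; the default route to `tshirts-ngw` mirrors the vSphere VLAN, while the second route and its `hypothetical-drg` target are made up for illustration.

```python
import ipaddress

def next_hop(route_table, destination):
    """Pick the most specific (longest-prefix) route that contains destination."""
    dest = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in route_table
        if dest in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None  # no matching route: traffic has nowhere to go
    return max(matches, key=lambda m: m[0].prefixlen)[1]

# Default route to the NAT Gateway, as on the vSphere VLAN; the second
# entry is a hypothetical, more specific route added for illustration.
route_table = [
    ("0.0.0.0/0", "tshirts-ngw"),
    ("192.168.100.0/24", "hypothetical-drg"),
]

print(next_hop(route_table, "8.8.8.8"))         # tshirts-ngw
print(next_hop(route_table, "192.168.100.25"))  # hypothetical-drg
```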

- Click NSG For <YOUR SDDC NAME>-vSphere

There are many Security Rules in the Network Security Group (firewall). These rules are configured automatically to allow all of the components of the SDDC and HCX to communicate with each other and out to the internet. Feel free to review each of the individual rules to understand what traffic flows where. It might help you sleep at night.

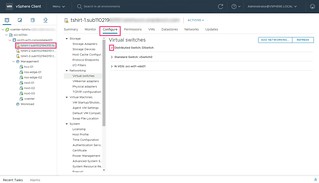

vSphere Virtual Portgroups

Let’s take a look at the virtual networking configuration inside vCenter.

- Click on the burger icon at the top left of the screen to display the menu

- Scroll down on the left-hand side menu and select VMware Solution

- Select the name of your newly deployed SDDC

- Copy the vSphere Client URL

- Paste the vSphere Client URL into a new browser tab

- Copy/Paste the vCenter Initial Username and vCenter Initial password to log in to vCenter

Once you are logged into vCenter:

- Click Menu in the vSphere client and select Networking on the dropdown menu

Here you can see a mixture of Distributed Virtual Switch (vDS) portgroups, NSX-T Distributed Portgroups (N-VDS), and standard portgroups. You should notice that these portgroup names are very similar to the SDDC VLANs that we reviewed earlier.

Virtual Distributed Switch (vDS) portgroups

| Portgroup | Description |
|---|---|
| vds01-vSphere | vSphere VLAN |
| vds01-vSAN | vSAN VLAN (ESXi Host vmk2 lives here) |
| vds01-vMotion | vMotion VLAN (ESXi Host vmk1 lives here) |
| vds01-HCX | HCX VLAN |
| Management Network | This network is used ONLY by Oracle during their deployment process and is routed to allow communication with the vSphere VLAN (ESXi Host vmk0 lives here) |

NSX-T Distributed Virtual Switch (N-VDS) portgroups

| Portgroup | Description |
|---|---|
| edge-ns | NSX Edge Uplink 1 VLAN |
| edge-transport | NSX Edge VTEP VLAN |
| workload | This is the NSX-T segment for our virtual machines that we specified during the configuration of the SDDC |

Standard vSphere portgroups

| Portgroup | Description |
|---|---|
| VM Network | This is deployed as part of the NSX-T deployment and is not used |
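If you would rather inventory these networks programmatically, the vSphere Automation REST API exposes them. The Python sketch below assumes the `/rest` endpoints found in vSphere 6.5 through 7.0 (`POST /rest/com/vmware/cis/session` and `GET /rest/vcenter/network`); newer releases also offer `/api/...` equivalents with slightly different response shapes. The vCenter hostname and credentials are placeholders for the values on the SDDC details page, and NSX-T segments are expected, not guaranteed, to appear with the type `OPAQUE_NETWORK`.

```python
import base64
import json
import urllib.request
from collections import defaultdict

def create_session(vcenter, username, password):
    """POST /rest/com/vmware/cis/session with basic auth; returns the session ID."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    req = urllib.request.Request(
        f"https://{vcenter}/rest/com/vmware/cis/session",
        method="POST",
        headers={"Authorization": f"Basic {token}"},
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)["value"]

def build_network_request(vcenter, session_id):
    """Build the GET request that lists networks for an existing session."""
    return urllib.request.Request(
        f"https://{vcenter}/rest/vcenter/network",
        headers={"vmware-api-session-id": session_id},
    )

def fetch_networks(vcenter, session_id):
    """Return the list of network summaries (name, network, type)."""
    with urllib.request.urlopen(build_network_request(vcenter, session_id)) as resp:
        return json.load(resp)["value"]

def group_by_type(networks):
    """Group network names by their vCenter network type."""
    groups = defaultdict(list)
    for net in networks:
        groups[net["type"]].append(net["name"])
    return dict(groups)
```

Usage would look like `sid = create_session(vc, user, pw)` followed by `print(group_by_type(fetch_networks(vc, sid)))`. If the vCenter certificate is not trusted by the Bastion host, you may need to add its CA to the trust store rather than disabling certificate verification.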

ESXi Host ‘vSphere’ Connectivity

Now let’s take a look at the ESXi Host connectivity from within vCenter. Even though we already looked at the ESXi Host connectivity in the Oracle Cloud interface earlier in this post, viewing it in vCenter, which most of us are more familiar with, may help clarify things.

- Click Menu in the vSphere client and select Hosts and Clusters on the dropdown menu

- Select your host named <hostprefix>-1 (e.g. tshirt-1.<subnet>.<domain>)

- Select the Configure tab

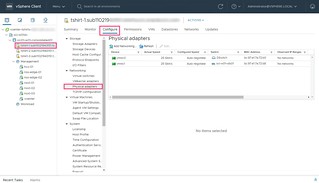

- Select Physical adapters

At the time of writing, the physical ESXi hosts (BM.DenseIO2.52) used for the OCVS SDDC each have two 25 Gbit/s physical network cards.

| Physical adapter | Assignment |
|---|---|
| vmnic0 | Assigned to the Distributed Virtual Switch (DSwitch) |
| vmnic1 | Assigned to the NSX-T Distributed Virtual Switch (oci-w01-vds01) |

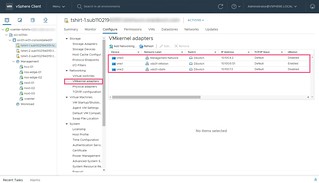

- Select VMkernel adapters

There are three VMkernel adapters configured by default, as you would typically find in an SDDC deployment.

| VMkernel adapter | Configuration |
|---|---|
| vmk0 | Added to the Management Network portgroup and used for ESXi management traffic |
| vmk1 | Added to the vds01-vMotion portgroup on the vDS and used for ESXi vMotion traffic |
| vmk2 | Added to the vds01-vSAN portgroup on the vDS and used for ESXi vSAN traffic |

- Select Virtual switches

- Click the down arrow next to Distributed Switch: DSwitch to display all three Virtual Switches

Here we can see the three different types of virtual switches configured on the ESXi Host that we identified earlier.

- Distributed Switch: DSwitch

- Standard Switch: vSwitch0

- N-VDS: oci-w01-vds01

- Click the chevron icon (>) next to Distributed Switch: DSwitch to expand the switch

Here we are able to see the portgroups configured within the vDS and the uplinks used for the vDS. In this case, we have a single uplink port.

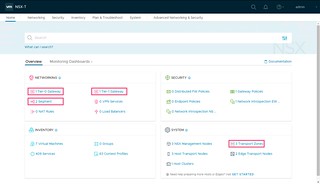

NSX-T Configuration

NSX-T is automatically deployed and configured for us when we deploy the SDDC. However, ongoing management and configuration of NSX-T will be required if you need to add additional workload segments to the environment in the future.

- Click on the burger icon at the top left of the screen to display the menu

- Scroll down on the left-hand side menu and select VMware Solution

- Select the name of your newly deployed SDDC

- Copy the NSX Manager URL

- Paste the NSX Manager URL into a new browser tab

- Copy/Paste the NSX Manager Initial Username and NSX Manager Initial password to log in to NSX Manager

At this point, we have a default configuration that includes a segment for our workloads network (192.168.1.0/24) which we requested in the SDDC deployment wizard.

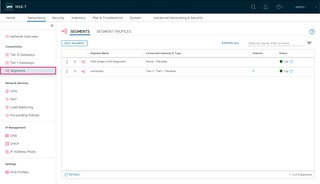

NSX-T Segments

- Click on Segments

There are two Segments configured in our environment.

| Segment | Description |
|---|---|
| NSX-Edge-VCN-Segment | This segment is used for connectivity between the two Edge nodes |
| workload | This segment is used for the workload network (192.168.1.0/24) that we specified during the deployment of the SDDC (You may not have this segment if you did not choose to deploy a workload network) |
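The same segment list can be retrieved from the NSX-T Policy API, which accepts HTTP basic authentication. A minimal Python sketch, assuming the standard `GET /policy/api/v1/infra/segments` endpoint; the manager hostname and credentials are placeholders for the values on the SDDC details page, and `summarize_segments` is a hypothetical helper that only pulls out each segment’s gateway address.

```python
import base64
import json
import urllib.request

def nsx_request(manager, path, username, password, method="GET", body=None):
    """Build a basic-auth request against the NSX-T Policy API."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    data = json.dumps(body).encode() if body is not None else None
    return urllib.request.Request(
        f"https://{manager}{path}",
        data=data,
        method=method,
        headers={"Authorization": f"Basic {token}",
                 "Content-Type": "application/json"},
    )

def summarize_segments(payload):
    """Map each segment's display name to its gateway address(es), if any."""
    return {
        seg["display_name"]: [s["gateway_address"]
                              for s in seg.get("subnets", [])
                              if "gateway_address" in s]
        for seg in payload.get("results", [])
    }
```

Usage: `req = nsx_request(mgr, "/policy/api/v1/infra/segments", user, pw)`, then `with urllib.request.urlopen(req) as r: print(summarize_segments(json.load(r)))`. As with vCenter, the Bastion host may need to trust the NSX Manager certificate first.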

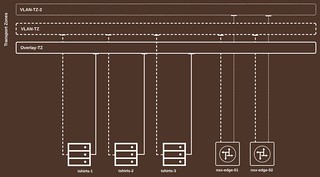

NSX-T Logical Routers

There are two logical routers: a Tier-0 and a Tier-1.

| Logical router | Description |
|---|---|
| Tier-0 | Connected to the NSX Edge VTEP VLAN, which allows Geneve encapsulated traffic to flow between the Edge nodes and the ESXi Hosts, and to the NSX Edge Uplink 1 VLAN, which allows traffic in and out of the environment |
| Tier-1 | Connected to the workload segment where our virtual machines will live. As we add additional segments, these will be connected to the Tier-1 |

NSX-T Transport Zones

There are three Transport Zones configured.

| Transport Zone | Description |
|---|---|
| Overlay-TZ | An Overlay Transport Zone that includes all ESXi Hosts and both NSX-T Edges and is used by the workload segments |
| VLAN-TZ | A VLAN Transport Zone that includes all ESXi Hosts and both NSX-T Edges and is used by the edge-ns and edge-transport logical switches |
| VLAN-TZ-2 | A VLAN Transport Zone that includes only the two NSX-T Edges and is used for Edge-to-Edge Geneve encapsulated traffic over the NSX-Edge-VCN-Segment segment |
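Putting the Tier-1 and the Overlay-TZ together, adding a new workload segment through the NSX-T Policy API amounts to a `PATCH` to `/policy/api/v1/infra/segments/<segment-id>` with a body that points at the Tier-1 gateway and the overlay transport zone. This Python sketch is not an OCVS-prescribed procedure: the segment name, gateway CIDR, Tier-1 ID, and transport zone ID are hypothetical, and you would look up the real IDs in NSX Manager (a transport zone’s policy ID is not necessarily its display name). The new CIDR must also not overlap any existing network.

```python
import base64
import json
import urllib.parse
import urllib.request

def segment_body(name, gateway_cidr, tier1_id, overlay_tz_id):
    """Build a Policy API body for an overlay segment attached to a Tier-1."""
    return {
        "display_name": name,
        "connectivity_path": f"/infra/tier-1s/{tier1_id}",
        "transport_zone_path": (
            "/infra/sites/default/enforcement-points/default/"
            f"transport-zones/{overlay_tz_id}"
        ),
        "subnets": [{"gateway_address": gateway_cidr}],
    }

def build_patch(manager, segment_id, body, username, password):
    """Build the PATCH request that creates (or updates) the segment."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    seg = urllib.parse.quote(segment_id, safe="")
    return urllib.request.Request(
        f"https://{manager}/policy/api/v1/infra/segments/{seg}",
        data=json.dumps(body).encode(),
        method="PATCH",
        headers={"Authorization": f"Basic {token}",
                 "Content-Type": "application/json"},
    )

# Hypothetical values for illustration only
body = segment_body("workload-2", "192.168.2.1/24", "my-tier1-id", "my-overlay-tz-id")
```

Sending it is then a matter of `urllib.request.urlopen(build_patch(mgr, "workload-2", body, user, pw))`. Using `PATCH` on the Policy API is idempotent, so re-running it with the same body should not create a duplicate segment.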

Networking Summary

Now that we have taken a look at the overall networking configuration of the SDDC after its initial deployment, hopefully you have a better understanding of how everything is connected. The following image illustrates how all of the components that make up the OCVS SDDC environment are connected. In future posts, we will likely add further networking configuration based on our use cases.

If you want to read some additional content, I highly recommend the following blog posts and websites, which have helped me to understand how this all fits together:

- L2 Networking with Oracle Cloud VMware Solution

- Oracle Cloud VMware Solution – Networking Quick Actions Part-1: Overview

- Oracle Cloud VMware Solution – Networking Quick Actions Part-2: Connectivity to On-prem Networks

- Oracle Cloud VMware Solution – Networking Quick Actions Part-3: Connectivity to Oracle Services Network

- Oracle Cloud VMware Solution – Networking Quick Actions Part-4: Connectivity to VCN Resources

- Oracle Cloud VMware Solution – Networking Quick Actions Part-5: Connectivity to Internet Using NAT Gateway

- https://notthe.blog/